Research

At Teesside University I am part of the Interpretable and Beneficial Artificial Intelligence research group. My research aims to bridge AI, Game Theory, and Complex Networks in order to make inferences and reach interpretable and explainable decisions.

Below I illustrate the main motivations, research questions, concepts, methods, and data that characterise part of my research activity.

Motivations

The aim has been to gain a better understanding of the complex dynamics of human behaviours, such as social or dietary behaviours, social contagion processes, and epidemic spreading, and to unveil the impact of hidden human-related factors.

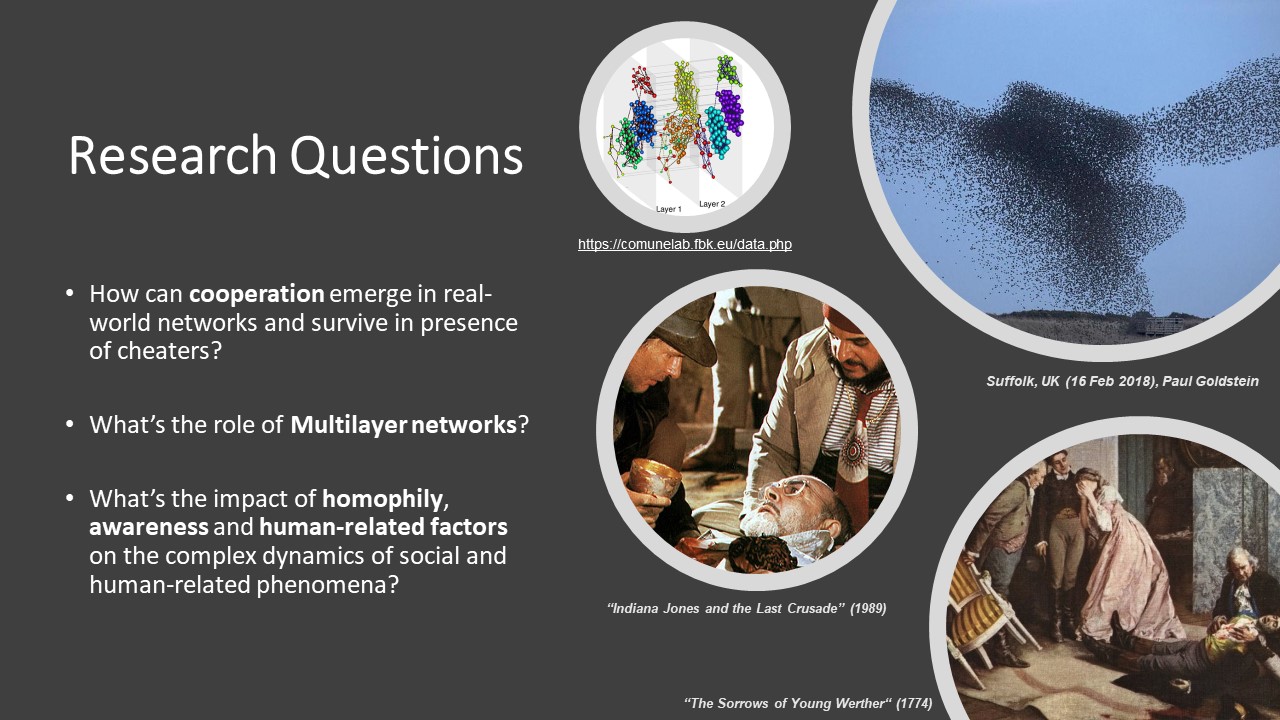

Questions

Here are some of the research questions. The first is addressed by game theory, the second relates to complex networks and network science, and the last introduces the bio-inspired and human-related factors incorporated in our modelling approaches.

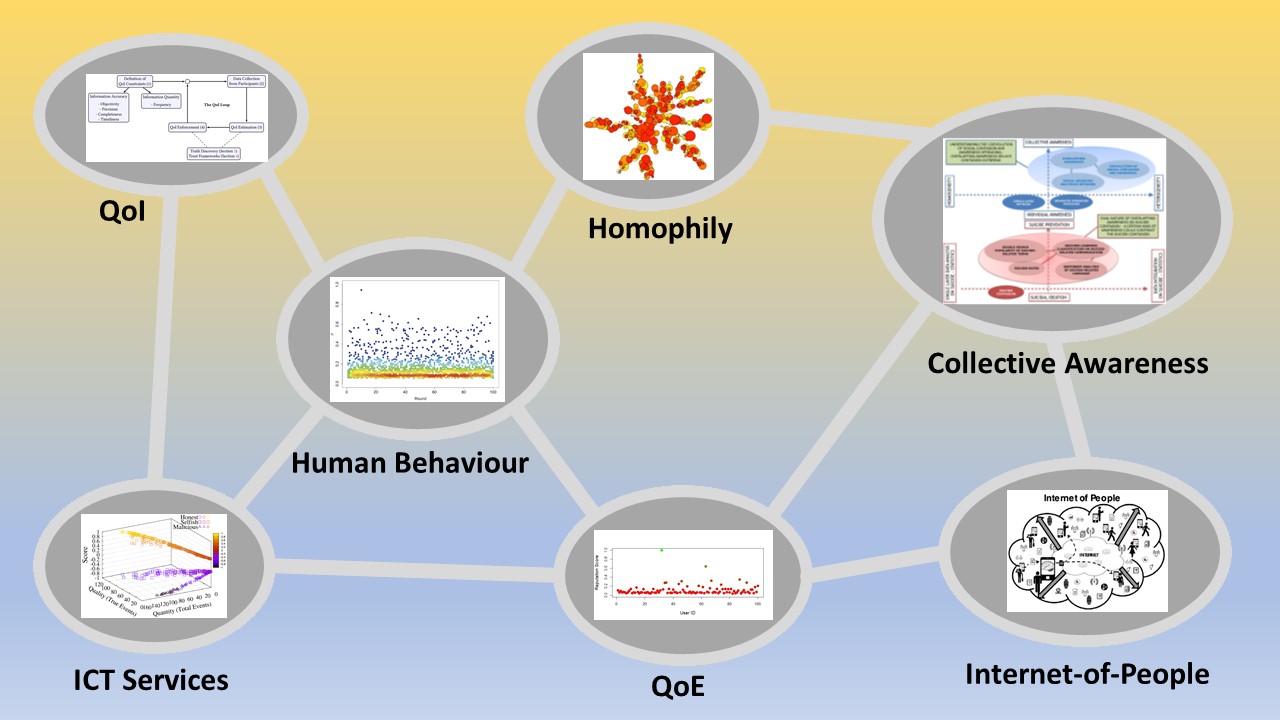

Concepts

These are the main concepts embodied in my methodologies, and the ones on which the main statistical estimators have been defined.

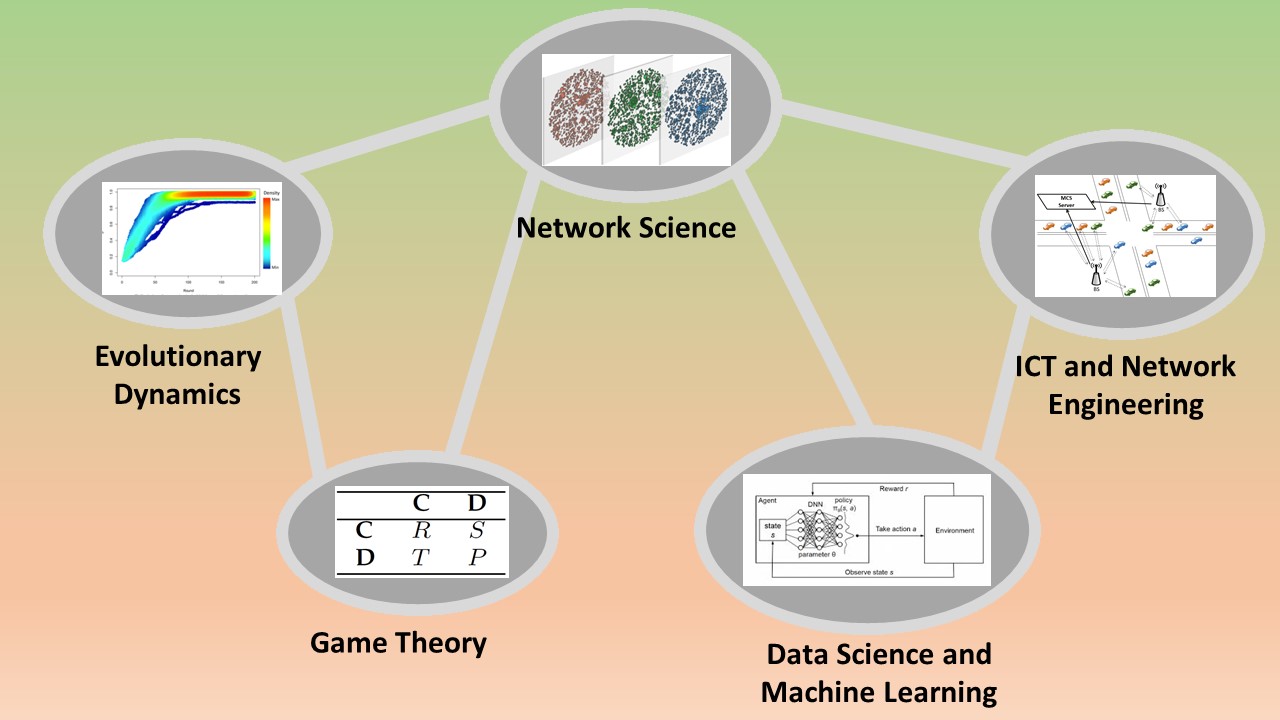

Methods

Here I summarise the methods used in my research activity and how they are linked to each other.

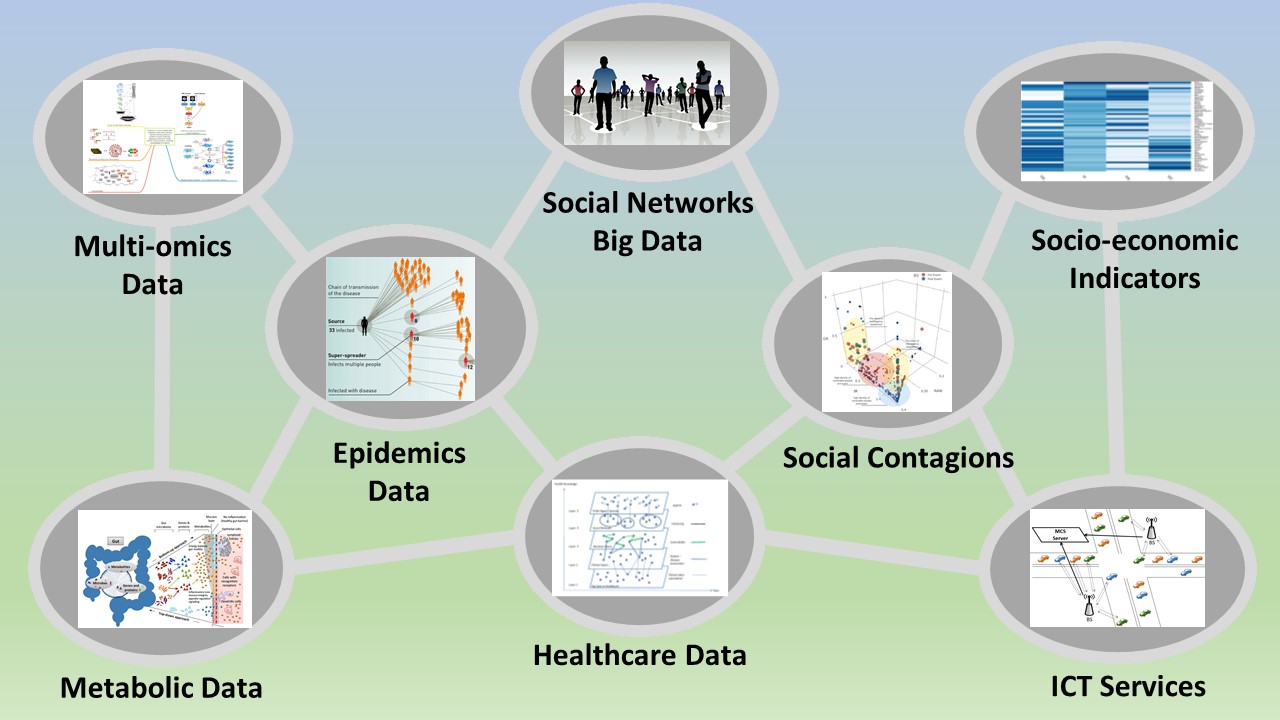

Data

Below are the different types of multi-scale data used: from social network data to users’ Google searches and search trends for specific words, to socio-economic, metabolic, or other healthcare data provided by the WHO on specific diseases or viruses, and data from MCS services (e.g., Waze).

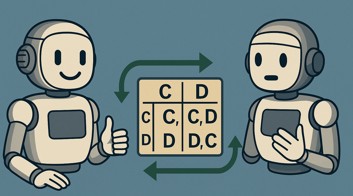

Recent work - FAIRGAME framework

By combining game-theoretic modelling with multiplex network representations, my work integrates data and knowledge, reduces system complexity, and enables the analysis of emergent behaviours and social phenomena in complex environments. This allows the design of innovative strategies that benefit society. Recently, I have focused on developing methodologies that bridge game theory, complex networks, and AI, with particular emphasis on multi-agent systems, the behaviour of LLM agents, and AI governance frameworks. This integration aims to unveil and manage emergent properties, recognise biases in multi-agent AI systems, ensure safe and fair AI behaviour, and create robust mechanisms for interactions between AI agents.

The work on FAIRGAME (Framework for AI Agents Bias Recognition using Game Theory), recently published at ECAI 2025, received the ECAI Outstanding Paper Award. FAIRGAME is a flexible framework built to assess the cooperativeness of AI agents. By assigning distinct identities and personalities to agents, FAIRGAME uses game-theoretic dynamics to observe how they interact, make decisions, and align with predefined behavioural expectations. It provides policy-relevant insights into how AI agents behave in strategic, multi-agent, or regulatory environments, which makes it highly relevant to emerging work on AI safety, AI governance, and AI Act compliance. As governments and industries increasingly rely on autonomous systems, frameworks like FAIRGAME will play a crucial role in assessing the reliability of LLM behaviours, identifying hidden risks and biases, and informing safe deployment strategies.

PhD Supervision/Di Stefano's lab

The following are the PhD students I am currently supervising or co-supervising at Teesside University:

Paolo Bova, PhD student in Computer Science, working in the area of game theory, agent-based modelling, complex networks, AI safety, Regulatory Markets for AI, and design of incentive mechanisms.

Andrew Powell, PhD student in Computer Science, working in the area of game theory, agent-based modelling, and fairness evaluation in LLM systems, integrating game-theoretic modelling into LLM-RL pipelines and extending game-theoretic bias detection frameworks.

I am on the supervisory team of Nikita Huber-Kralj, PhD student in Computer Science, focusing on adaptive configuration and curriculum learning of RL agents for microbial ecosystem simulation using LLMs.

I am the second supervisor of Ghazal Salimi, PhD student in Civil Engineering, working on improved computational approaches to optimal generative environmental performance-based building design. Ghazal has been involved in the AKT2I in collaboration with TaperedPlus, for which I was the PI, and we have been working on NLP methods to enhance construction design efficiency.

I was also the second supervisor of Stephen Richard Varey, who completed his PhD in Computer Science in June 2025 with a thesis entitled “Knowledge Representation and Inference Techniques for use in Agent Societies”.

PhD Examination (Internal & External)

External examiner of 4 PhD theses (2022-2025), listed below:

Alessia Lucia Prete, PhD student in Information Engineering and Science, University of Siena (2025).

Andrew Fuchs, PhD student in Computer Science, University of Pisa (2024).

Pietro Bongini, PhD student in Computer Science, University of Florence (2022).

Pablo Spivakovsky Gonzalez, PhD student in Computer Science, University of Cambridge (2022).

Internal examiner of 3 PhD theses (2023-2025) at Teesside University, listed below:

Delbaz Samadian, PhD student in Civil Engineering, Teesside University (2025).

Zainab Alalawi, PhD student in Computer Science, Teesside University (2024).

Markus Krellner, PhD student in Computer Science, Teesside University (2023).